In terms of technological development, we may still be at least a couple of decades away from truly autonomous artificial intelligence systems that communicate with us in a genuinely “human-like” way.

But in many ways, we’re progressing towards that future at a surprisingly fast pace, thanks to the continuing development of what is known as automatic speech recognition technology. So far, it promises some truly useful innovations in user experience for all sorts of applications.

Automatic Speech Recognition (ASR) is the technology that allows people to use their voices to communicate with a computer interface in a way that, in its most sophisticated forms, resembles normal human conversation.

The most advanced ASR technologies currently in development revolve around what is called Natural Language Processing (NLP). This variant of ASR comes closest to allowing real conversation between people and machine intelligence. Though it still has a long way to go before reaching its full potential, we’re already seeing some remarkable results in the form of intelligent smartphone interfaces like Siri on the iPhone, and in other systems used in business and advanced technology contexts.

However, even these NLP programs, despite an “accuracy” of roughly 96 to 99%, only achieve such results under ideal conditions: when the questions people direct at them are of a simple yes-or-no type, or have only a limited number of possible responses based on selected keywords (more on this shortly).
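The article doesn’t say how that “accuracy” is measured. One widely used metric for speech recognition is word error rate (WER): the number of word substitutions, deletions and insertions needed to turn the recognizer’s transcript into a correct reference transcript, divided by the number of words in the reference. Accuracy is often quoted loosely as 1 − WER. Here is a minimal Python sketch, assuming whitespace-separated transcripts and a non-empty reference; the function name and example sentences are made up for illustration.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance (substitutions + deletions + insertions)
    divided by the number of words in the reference transcript."""
    ref = reference.split()
    hyp = hypothesis.split()
    # dist[i][j] = edits needed to turn ref[:i] into hyp[:j]
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete every reference word
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert every hypothesis word
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,         # deletion
                dist[i][j - 1] + 1,         # insertion
                dist[i - 1][j - 1] + cost,  # substitution (or match)
            )
    return dist[len(ref)][len(hyp)] / len(ref)


reference = "what is the weather forecast for today"
hypothesis = "what is the whether forecast for today"
wer = word_error_rate(reference, hypothesis)
print(f"WER: {wer:.1%}, accuracy: {1 - wer:.1%}")  # WER: 14.3%, accuracy: 85.7%
```

One misheard word out of seven already costs about 14 points of accuracy, which shows how clean the conditions must be for a 96 to 99% figure.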

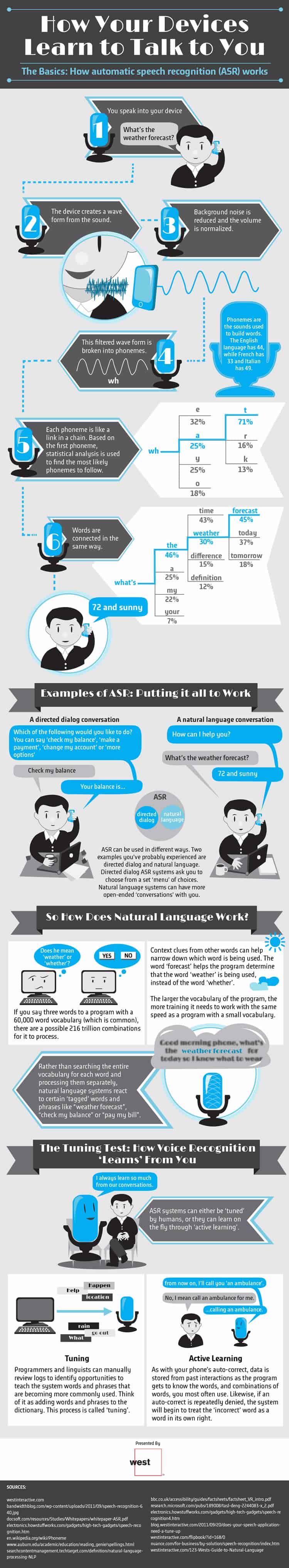

Now that we’ve covered the wonderful future prospects of ASR technology, let’s take a look at how these systems work today, in the forms we’re already using.

Much of the information we’re about to cover is also explained in considerable, highly visual detail in the complementary infographic created by the ASR software professionals at West Interactive, and their post is well worth a look.

A Basic Primer on How Automatic Speech Recognition Works

The basic sequence of events by which any Automatic Speech Recognition software, regardless of its sophistication, picks up your words and breaks them down for analysis and response goes as follows:

- You speak to the software via an audio feed

- The device you’re speaking to creates a wave file of your words

- The wave file is cleaned by removing background noise and normalizing volume

- The resulting filtered waveform is then broken down into what are called phonemes. (Phonemes are the basic building-block sounds of language and words. English has about 44 of them, including sounds such as “wh”, “th”, “k” and “t”.)

- Each phoneme is like a link in a chain: by analyzing the phonemes in sequence, starting from the first one, the ASR software uses statistical probability analysis to deduce whole words and, from there, complete sentences.

- Your ASR, now having “understood” your words, can respond to you in a meaningful way.
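The cleaning and phoneme-to-word steps can be sketched in a few lines of Python. This is a toy illustration under loud assumptions: the audio is a plain list of float samples, a phoneme recognizer has already run, and the tiny lexicon, its ARPAbet-style symbols and its probabilities are all invented for the example. Real recognizers combine acoustic and language models rather than relying on word priors alone.

```python
from math import log

# Hypothetical toy lexicon: word -> (phoneme sequence in ARPAbet-style
# symbols, made-up prior probability of the word being spoken).
LEXICON = {
    "I": (("AY",), 0.5),
    "ice": (("AY", "S"), 0.3),
    "cream": (("K", "R", "IY", "M"), 0.3),
    "scream": (("S", "K", "R", "IY", "M"), 0.05),
}


def clean(samples, noise_floor=0.02):
    """Cleaning step: silence samples quieter than noise_floor (a crude
    noise gate), then scale so the loudest sample has magnitude 1.0."""
    gated = [s if abs(s) >= noise_floor else 0.0 for s in samples]
    peak = max((abs(s) for s in gated), default=0.0)
    return [s / peak for s in gated] if peak else gated


def decode(phonemes):
    """Phoneme-to-word step: read the chain from the first phoneme and keep,
    for every position, the most probable way to split the phonemes so far
    into lexicon words (dynamic programming over log-probabilities)."""
    n = len(phonemes)
    # best[i] = (log-probability, words) for the first i phonemes
    best = [(float("-inf"), [])] * (n + 1)
    best[0] = (0.0, [])
    for i in range(n):
        score, words = best[i]
        if score == float("-inf"):
            continue  # no word sequence ends exactly here
        for word, (pron, prob) in LEXICON.items():
            j = i + len(pron)
            if tuple(phonemes[i:j]) == pron and score + log(prob) > best[j][0]:
                best[j] = (score + log(prob), words + [word])
    return best[n][1]  # empty list if the phonemes can't be fully explained


print(clean([0.01, 0.5, -0.25, 0.005, 0.1]))  # [0.0, 1.0, -0.5, 0.0, 0.2]
print(decode(["AY", "S", "K", "R", "IY", "M"]))  # ['ice', 'cream']
```

With these made-up priors, “ice cream” (0.3 × 0.3 = 0.09) beats “I scream” (0.5 × 0.05 = 0.025), which is the kind of statistical choice the phoneme-chain step describes.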

Some Key Examples of Automatic Speech Recognition Variants

The two main variants of Automatic Speech Recognition software are directed dialogue conversations and natural language conversations (the latter being the Natural Language Processing we mentioned above).

Directed dialogue conversations are the much simpler form of ASR. They consist of machine interfaces that verbally tell you to respond with a specific word from a limited list of choices, and they base their response to your narrowly defined request on that word. Automated telephone banking and other customer service interfaces commonly use directed dialogue ASR software.
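A directed dialogue menu can be sketched as a lookup of the recognized words against a short, fixed list of allowed answers. The menu options and the `route` function below are hypothetical, and the sketch assumes the speech has already been turned into a text transcript.

```python
# Hypothetical menu for an automated telephone-banking line: each option
# lists the only words the caller is told they may say.
MENU = {
    "balance": {"balance"},
    "payments": {"payments", "pay"},
    "agent": {"agent", "operator"},
}


def route(transcript):
    """Return the single menu option whose allowed words appear in the
    recognized transcript, or None so the system can re-prompt the caller."""
    words = set(transcript.lower().split())
    matches = [option for option, allowed in MENU.items() if words & allowed]
    # Zero matches (off-menu) or several (ambiguous) both lead to a re-prompt.
    return matches[0] if len(matches) == 1 else None


print(route("Balance"))               # balance
print(route("I'd like an operator"))  # agent
print(route("tell me a joke"))        # None
```

Because the system only ever has to choose among a handful of words, it can be very reliable, which is exactly why it feels so restrictive compared with open conversation.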

Natural language conversations (the NLP we covered in our intro) are the much more sophisticated variants of ASR. Instead of heavily limited menus of words you may use, they try to simulate real conversation by letting you speak to them in an open-ended, chat-like format. Siri on the iPhone is a highly advanced example of these systems.

How Does Natural Language Processing Work?

Because it represents the future direction of ASR technology, NLP matters far more than directed dialogue to the development of speech recognition systems.

The way it works is designed to loosely simulate how humans themselves comprehend speech and respond accordingly.

The typical vocabulary of an NLP ASR system consists of 60,000 or more words. That means that if you say just three words in sequence to it, there are more than 215 trillion possible word combinations!
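The arithmetic behind that figure is simply the vocabulary size raised to the number of words, because each of the three positions can hold any of the 60,000 words. This counts every ordered sequence, including nonsensical ones, and uses the article’s lower-bound vocabulary size:

```python
vocabulary_size = 60_000  # the article's "60 thousand or more" words
phrase_length = 3         # three words spoken in sequence

# Every position can hold any word, so the count is 60,000 ** 3.
combinations = vocabulary_size ** phrase_length
print(f"{combinations:,}")  # 216,000,000,000,000
```

That is 216 trillion sequences, and the number grows by another factor of 60,000 with every extra word.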

Obviously then, it would be grossly impractical for an NLP ASR system to scan its entire vocabulary for each word and process them individually. Instead, what the natural language system is designed to do is react to a much smaller list of selected “tagged” keywords that give context to longer requests.

Thus, using these contextual clues, the system can much more quickly narrow down exactly what you’re saying to it and find out which words are being used so that it can adequately respond.

For example, if you say phrases like “weather forecast”, “check my balance” and “I’d like to pay my bills”, the tagged keywords the NLP system focuses on might be “forecast”, “balance” and “bills”. It would then use these words to interpret the other words you used in context, and avoid errors such as confusing “weather” with “whether”.
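Here is a minimal Python sketch of that idea, assuming the recognizer hands over a short list of sound-alike candidates for each spoken word. The keyword table, the intent names and the single “weather” rule are hypothetical; production systems typically rely on statistical models learned from data rather than hand-written tables like these.

```python
# Hypothetical tagged keywords and the kind of request (intent) each signals.
TAGGED_KEYWORDS = {
    "forecast": "weather",
    "balance": "account_balance",
    "bills": "bill_payment",
}

# Hypothetical context rules: spellings of sound-alike words that fit an intent.
PREFERRED_SPELLINGS = {
    "weather": {"weather"},
}


def find_intent(words):
    """Scan only for tagged keywords rather than analysing every word."""
    for word in words:
        if word in TAGGED_KEYWORDS:
            return TAGGED_KEYWORDS[word]
    return None


def resolve(candidates, intent):
    """Choose among sound-alike candidates, using the intent as context."""
    preferred = PREFERRED_SPELLINGS.get(intent, set())
    for word in candidates:
        if word in preferred:
            return word
    return candidates[0]  # no contextual clue: keep the recognizer's first guess


def interpret(heard):
    """`heard` holds one list of sound-alike candidates per spoken word."""
    intent = find_intent(word for candidates in heard for word in candidates)
    return intent, [resolve(candidates, intent) for candidates in heard]


print(interpret([["whether", "weather"], ["forecast"]]))
# ('weather', ['weather', 'forecast'])
print(interpret([["check"], ["my"], ["balance"]]))
# ('account_balance', ['check', 'my', 'balance'])
```

The keyword “forecast” alone sets the context, and that context is what tips the ambiguous first word towards “weather”.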

The Tuning Test: How ASR is made to “Learn” from Humans

The training of ASR systems, be they NLP or directed dialogue systems, relies on two main mechanisms. The first and simpler of these is called Human “Tuning”, and the second, much more advanced one is called “Active Learning”.

Human Tuning: This is a relatively simple means of performing ASR training. It involves human programmers going through the conversation logs of a given ASR software interface and looking for commonly used words that the system heard but that are not in its pre-programmed vocabulary. Those words are then added to the software so that it can expand its comprehension of speech.
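The log review can be partly automated so that programmers only look at the most promising candidates. This sketch assumes the conversation logs are already available as text transcripts; the function name, the `min_count` threshold and the sample logs are hypothetical, and a human still decides which words actually get added.

```python
from collections import Counter


def unknown_word_report(transcripts, vocabulary, min_count=2):
    """Count words in the conversation logs that are missing from the
    vocabulary, most frequent first, for a human to review."""
    counts = Counter(
        word
        for line in transcripts
        for word in line.lower().split()
        if word not in vocabulary
    )
    return [(word, n) for word, n in counts.most_common() if n >= min_count]


vocabulary = {"pay", "my", "bill", "check", "balance", "the"}
logs = [
    "pay my bill",
    "check my balance",
    "pay my invoice",
    "invoice please",
    "pay the invoice",
]
print(unknown_word_report(logs, vocabulary))  # [('invoice', 3)]
```

If the reviewer approves “invoice”, adding it is a one-line change such as `vocabulary.add("invoice")`; the judgement call stays with the human, which is what distinguishes tuning from active learning.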

Active Learning: Active learning is the much more sophisticated variant of ASR training and is being tried particularly with NLP versions of speech recognition technology. With active learning, the software itself is programmed to autonomously learn, retain and adopt new words, constantly expanding its vocabulary as it’s exposed to new ways of speaking and saying things.

This, at least in theory, allows the software to pick up on the more specific speech habits of particular users so that it can communicate better with them.

So, for example, if a given user keeps overriding the autocorrection of a specific word, the NLP software eventually learns to treat that person’s use of the word as the “correct” version.
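The article doesn’t specify how the software decides it has “learned” a word. One simple possibility is a per-user counter with a threshold, sketched below; the `PersonalVocabulary` class, the threshold of three and the example word are all hypothetical, and real systems may use far more elaborate statistical methods.

```python
from collections import defaultdict


class PersonalVocabulary:
    """Hypothetical per-user word store: once a user has undone the
    autocorrection of a word `threshold` times, their spelling is kept."""

    def __init__(self, threshold=3):
        self.threshold = threshold
        self.override_counts = defaultdict(int)  # word -> times the user undid its correction
        self.learned = set()  # words now treated as "correct" for this user

    def record_override(self, word):
        """Call each time the user reverts an autocorrection of `word`."""
        self.override_counts[word] += 1
        if self.override_counts[word] >= self.threshold:
            self.learned.add(word)

    def autocorrect(self, word, suggestion):
        """Keep the user's word if it has been learned; otherwise correct it."""
        return word if word in self.learned else suggestion


user = PersonalVocabulary(threshold=3)
user.record_override("gonna")
user.record_override("gonna")
print(user.autocorrect("gonna", "going to"))  # going to
user.record_override("gonna")
print(user.autocorrect("gonna", "going to"))  # gonna
```

Keeping the store per user means one person’s habits don’t change the behaviour for everyone else, which matches the goal of adapting to a particular user’s speech.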


(Lead image: Depositphotos)