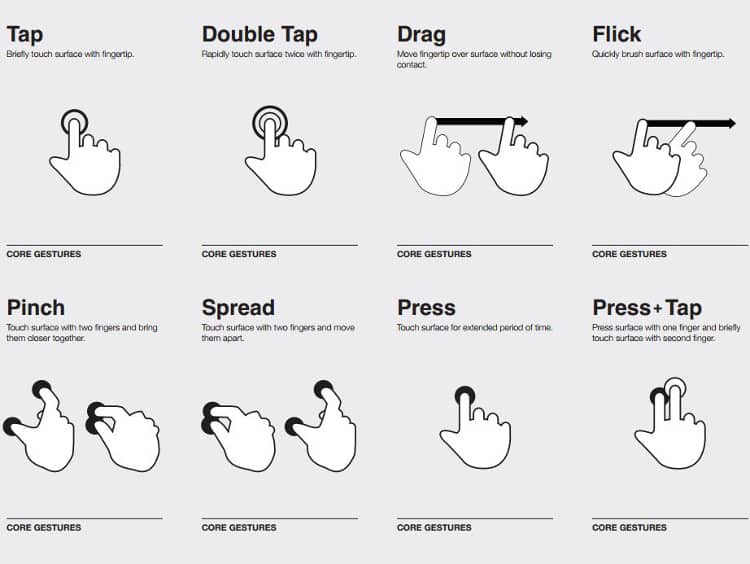

The role of gestures on mobile devices has been evolving for a decade. As most people understand it, a gesture is a command entered by touch on a touch-enabled screen or trackpad. The term arguably entered the mainstream with Apple’s trackpad and its input commands.

With 12 standard gesture commands on desktop and 8 for mobile, gestures solve unique navigational problems for UI across platforms.

Just as gestures in real life are not limited to the hands, gesture technology has also expanded beyond the fingertip. Samsung’s Galaxy S4 came with the ability to track the user’s eyes as they moved to different positions on the page: as the user’s gaze moved lower, the page scrolled down. This may not seem like a gesture, but it does qualify as an augmented method of navigation. Samsung achieved this by using the front-facing camera to identify and respond to general patterns of vision (particularly when reading from top to bottom). As one might imagine, this increases power consumption because the camera is constantly on. Its reliability is also relatively low compared with simply using a finger, which is already right there while you hold the device. It was, in a sense, a solution in search of a problem.

Screen size is a major factor in a consumer’s decision to buy a particular device. It is also a headache for designers, because an application needs to run on devices of different sizes and on different operating systems. Very often, this results in separate tablet and mobile versions of an application, which can change how gestures are used and implemented. The question has been asked countless times: how big is too big? Phablets have varied in size, and the market has gradually shifted in favor of larger screens.

Larger screens do come at a cost to the UX: users cannot reach every part of the screen with one hand. According to Forbes, phablet sales are approaching 230 million units worldwide. What may soon change is the space around the device, which could become part of gesture navigation. People are unlikely to carry tablet-sized phones in their pockets, so making better use of the hardware phones already have may be the route to new functionality. The iPad Mini 3 has a 7.9-inch display and the iPhone 6 Plus a 5.5-inch one, a difference of just 2.4 inches; devices in that range occupy a gray area of usability.

Addressing the Issue using In-Air Hand Gestures

The screen-size limitation has prompted a number of innovative approaches, especially from research institutions. One recent approach repurposes the GSM signal that mobile phones already transmit to interpret basic commands. In 2014, researchers at the University of Washington developed a system for entering commands using “in-air hand gestures.” They call it a novel system, but many engineers would consider it more revolutionary than novel.

The study, called “SideSwipe: Detecting In-air Gestures Around Mobile Devices Using Actual GSM Signals” reported that the “system leverages the actual (unmodified) GSM signal to detect hand gestures around the device. We developed an algorithm to convert the bursty reflected GSM pulses to a continuous signal that can be used for gesture recognition. Specifically, when a user waves their hand near the phone, the hand movement disturbs the signal propagation between the phone’s transmitter and added receiving antennas.”
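The study’s exact conversion algorithm is not reproduced here, so what follows is only a minimal sketch under stated assumptions, not SideSwipe’s published method. It assumes the input is an array of received amplitudes, treats any sample above a hypothetical `threshold` as part of a transmit burst, and reduces each burst to its mean amplitude. Linear interpolation between burst centres then turns the intermittent pulses into a continuous trace that a gesture recognizer could consume. The function name `bursts_to_continuous` and the choice of interpolation are illustrative.

```python
import numpy as np

def bursts_to_continuous(samples, threshold):
    """Hypothetical sketch: turn a bursty amplitude trace into a continuous one.

    Samples whose magnitude exceeds `threshold` are assumed to belong to a
    transmit burst. Each burst is summarised by its mean magnitude, placed at
    the burst's centre, and the gaps between bursts are filled by linear
    interpolation (values before the first / after the last burst are held).
    """
    samples = np.asarray(samples, dtype=float)
    active = np.abs(samples) > threshold
    if not active.any():
        return np.zeros_like(samples)

    # Locate the start (inclusive) and end (exclusive) of each run of active samples.
    padded = np.concatenate(([0], active.astype(int), [0]))
    edges = np.flatnonzero(np.diff(padded))
    starts, ends = edges[0::2], edges[1::2]

    centres = (starts + ends - 1) / 2.0
    levels = np.array([np.abs(samples[s:e]).mean() for s, e in zip(starts, ends)])
    return np.interp(np.arange(samples.size), centres, levels)


# Synthetic example: two bursts whose strength drops as a hand blocks the signal.
trace = [0, 0, 1.0, 1.0, 0, 0, 0, 0.5, 0.5, 0]
print(bursts_to_continuous(trace, threshold=0.2))
```

Because a GSM phone transmits only in short time slots, the gaps between bursts say nothing about the hand; interpolation is one simple way to bridge them, and a real system might use filtering instead.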

A whole new way to navigate and input commands on mobile devices would be a welcome challenge for designers and developers. If manufacturers adopt SideSwipe, the rollout would probably begin with native apps. If Apple, for instance, decided to build this functionality into future iterations of the iPhone, it would become a system-wide function across iOS.

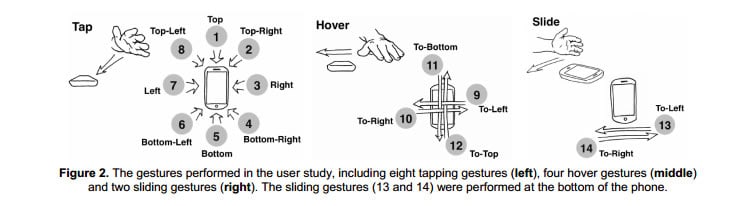

So far, the engineers at the University of Washington have identified 13 inputs that would work with GSM devices.

With phablet screens topping out at around six inches, designers and developers may be able to remove many on-screen buttons that are considered clutter. Everything from changing browser tabs to flipping through photos could be as simple as a wave of the hand.

One concern is fluctuation in transmitting power. The engineers address this by normalizing the received signal against the power at which the phone’s GSM transmitter is operating. On this issue, the study reported: “The GSM system usually adjusts its transmitting power to maintain a stable gain in a fluctuating environment and in turn affects the receiving power density perceived by our antenna array … Such fluctuation is undesirable in gesture recognition, as two signal patterns of the same gestures may appear completely different. To adapt to this fluctuation, we normalize the received data corresponding to the transmitting power.”
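As a rough illustration of why this normalization helps, the sketch below assumes that the transmit power level is known for each sample (a hypothetical input) and that both powers are expressed in dBm. Dividing received power by transmit power becomes a subtraction in decibels, so a gesture that attenuates the signal by the same amount yields the same normalized pattern whether the phone is transmitting at a high or a low level. This is not the study’s code; the function name, the constant path-loss figure, and the gesture values are assumptions chosen for illustration.

```python
import numpy as np

def normalize_by_tx_power(received_dbm, tx_power_dbm):
    """Return received power relative to transmit power, in dB.

    Hypothetical sketch: dividing linear powers is equivalent to subtracting
    their dBm values, which cancels changes made by the phone's power control.
    """
    return np.asarray(received_dbm, dtype=float) - np.asarray(tx_power_dbm, dtype=float)


# The same hypothetical gesture: extra attenuation (dB) as a hand sweeps past.
gesture = np.array([0.0, -3.0, -6.0, -3.0, 0.0])
path_loss_db = 40.0  # assumed constant loss from transmitter to receiving antennas

for tx_dbm in (20.0, 26.0):  # two different power-control settings
    tx = np.full_like(gesture, tx_dbm)
    received = tx - path_loss_db + gesture
    # Raw received values differ by 6 dB; the normalized patterns are identical.
    print(tx_dbm, normalize_by_tx_power(received, tx))
```

Without the subtraction, the two runs would differ by a constant 6 dB offset and could be mistaken for different gestures, which is exactly the problem the study describes.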

In terms of hardware, very little needs to be added to the device. That is what truly tests the boundaries of innovation: when it comes to gestures, the technology needed to realize their full potential has been in users’ hands all along.


(Lead image: Depositphotos)